- This topic has 25 replies, 2 voices, and was last updated 4 years, 2 months ago by

Vadim Smirnov.


June 26, 2021 at 4:14 am #11550

Hi,

We wanted to test how well Windows Packet Filter performs, so we installed Windows Packet Filter 3.2.29.1 x64.msi and compiled the capture tool. With it running, SMB file transfer speed on shares dropped by around 70-80%! Is there any way to fix this? Is this a bug or..?

June 26, 2021 at 4:20 am #11551

Also, shouldn’t the recently implemented Fast I/O fix this?

We compiled capture as a 64-bit binary (so not WOW64), and the test machine ran Windows 10 20H1.

What is causing this problem?

June 26, 2021 at 4:45 am #11553

The capture tool was primarily designed for testing/debugging purposes and it uses relatively slow file stream I/O, i.e. each intercepted packet is delayed for the time needed to write it into the file, resulting in increased latency and decreased bandwidth.

If you need a high-speed traffic capture solution, you have to implement in-memory packet caching and write captured packets into the file from a dedicated thread instead of doing this in the packet filtering one. Or you could use a memory-mapped file and let the Windows cache manager do the rest 🤔

P.S. And yes, Fast I/O may improve the performance even further…
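
The in-memory caching plus dedicated writer thread suggested above could look roughly like the sketch below. It is only an illustration in standard C++: `async_packet_writer` is a hypothetical helper, not part of the Windows Packet Filter API, the queue is unbounded (a real tool would cap it), and packets are written as raw bytes with no pcap framing. The filtering thread only copies the packet into a queue; the slow file write happens on the worker thread.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <fstream>
#include <mutex>
#include <string>
#include <thread>
#include <vector>

// Hypothetical helper (not a Windows Packet Filter class): buffers packets in
// memory and writes them to disk on a dedicated thread.
class async_packet_writer {
public:
    explicit async_packet_writer(const std::string& path)
        : out_(path, std::ios::binary | std::ios::trunc),
          worker_([this] { run(); }) {}

    ~async_packet_writer() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            done_ = true;
        }
        cv_.notify_one();
        worker_.join();  // the worker flushes whatever is still queued
    }

    // Called from the packet filtering thread: copies the bytes and returns
    // without touching the file.
    void enqueue(const std::uint8_t* data, std::size_t size) {
        assert(data != nullptr || size == 0);
        {
            std::lock_guard<std::mutex> lock(mutex_);
            queue_.emplace_back(data, data + size);
        }
        cv_.notify_one();
    }

private:
    void run() {
        std::deque<std::vector<std::uint8_t>> batch;
        for (;;) {
            {
                std::unique_lock<std::mutex> lock(mutex_);
                cv_.wait(lock, [this] { return done_ || !queue_.empty(); });
                if (queue_.empty()) return;  // done_ is set and nothing is left
                batch.swap(queue_);          // take the whole backlog at once
            }
            // File I/O happens outside the lock, so enqueue() is never
            // blocked by a slow disk write.
            for (const auto& pkt : batch) {
                out_.write(reinterpret_cast<const char*>(pkt.data()),
                           static_cast<std::streamsize>(pkt.size()));
            }
            batch.clear();
        }
    }

    std::ofstream out_;
    std::mutex mutex_;
    std::condition_variable cv_;
    std::deque<std::vector<std::uint8_t>> queue_;
    bool done_ = false;
    std::thread worker_;  // declared last so it starts after the other members exist
};
```

In a capture loop, the packet handler would call `enqueue()` with the packet buffer and length and then pass the packet on immediately; destroying the writer at shutdown drains the queue.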

June 26, 2021 at 6:27 am #11554

We removed the file writing part, but the problem still persists. So right now it just gets the packet from the driver and then passes it back to the kernel.

I should mention that our connection speed is 1Gb/s, and running capture, even after removing the file writes, reduces it to 200Mb/s when moving files from shares.

Can you give it a try as well by removing the file writing and see how much it reduces the speed?

June 26, 2021 at 6:36 am #11557

The sample code you tested was designed for demo purposes only. If you are interested in the performance tests you can check this post.

June 26, 2021 at 7:09 am #11558

I actually read that post too and compiled the exact code, and the result is still the same: SMB file transfer speed gets reduced by 70-80%.

The reason I tried the newest version was that I was hoping direct I/O would fix this issue, since it wasn’t implemented in that post, but it seems like it doesn’t.

I’m also not sure how that post claims to reach 90MB/s when I can’t reach 30-40. Maybe it didn’t test file transfer through a share?

Are you getting the same result as me?

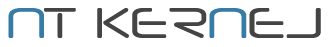

June 26, 2021 at 7:25 am #11563

I’ve just taken the dnstrace sample (dumps DNS packets and passes everything else without any special handling) and tried to copy one large file from the system running dnstrace to another one. Here is the result with dnstrace running and without:

June 26, 2021 at 7:36 am #11565

The only idea I have is that you have some other third-party software (which includes an NDIS/WFP filter) and it somehow results in a software conflict… Try to set up two fresh Windows installations connected over a switch or a direct cable.

P.S. To be sure, I retested with the copy direction reversed and the result is still the same…

June 26, 2021 at 8:18 am #11566

I guess one reason could be how powerful the underlying CPU is. I tried dnstrace and the speed got reduced from 80MB/s to 40MB/s. But it is weird that it doesn’t get reduced at all in your case. Are you sure you are copying from a shared folder on a network? Mine is a freshly installed Windows 10 (VM).

I want to measure how much overhead this project has if we use it to send every packet to user mode to check (block or not), and then send on those that are OK based on the user-mode decision. So dnstrace seems to do exactly this, right? Based on reading its code, it receives packets from the kernel and sends those that are OK (which in this case is all of them) back to the kernel, right?

June 26, 2021 at 8:21 am #11568

Can you also try to use the code that was shared on the blog that you mentioned to see if you still don’t get any reduced performance?

June 26, 2021 at 8:36 am #11569

> I guess one reason could be because of how powerful the underlying CPU is.

It is easy to verify: just start Task Manager (or Resource Monitor) while you copy the file and check the CPU load with and without dnstrace running. If your CPU peaks even without dnstrace, then it is no wonder that you get throughput degradation when you add extra work…

> I want to measure how much overhead this project has if we use it to send every packet to user to check (block or not), and then send those that are OK based on user mode decision. So this dnstrace seems to do exactly this right?

Yes, it filters (takes from the kernel to user space and then re-injects back into the kernel) all packets passed through the specified network interface, selects DNS responses, decodes and dumps.

> Can you also try to use the code that was shared on the blog that you mentioned to see if you still don’t get any reduced performance?

Well, dnstrace is good enough to test with. Also, if you would like to test with the fast I/O option you can take sni_inspector. It also filters all the traffic for the selected interface, but instead of DNS responses it selects and dumps the SNI field from the TLS handshake.

June 26, 2021 at 8:44 am #11570

> Also, if you would like to test with fast I/O option you can take sni_inspector.

Isn’t fast I/O implemented in dnstrace as well? I saw some fast I/O stuff in its code. Also, is fast I/O only available if we compile as 64-bit?

> It is easy to verify, just start Task Manager (or Resource Monitor) when you copy the file and check the CPU load with and without dnstrace running. If your CPU peaks even without dnstrace then no wonder if you get the throughput degradation when add extra work…

Yep, it seems like the bottleneck is CPU-intensive work. The CPU I’m testing with is a core i7 5500; even though it’s not high end, it’s what most ordinary users have, and obviously we can’t tell our clients to just upgrade to a high-end CPU to fix this issue if we purchase this project. Is there any way to fix this? Does dnstrace fully implement fast I/O?

What CPU are you testing with?

June 26, 2021 at 9:05 am #11573

Some samples use fast I/O and others don’t, but it is very easy to switch a sample between the fast and the old model by changing one line of code:

For the Fast I/O:

auto ndis_api = std::make_unique<ndisapi::fastio_packet_filter>(

For the ordinary I/O:

auto ndis_api = std::make_unique<ndisapi::simple_packet_filter>(

And yes, fast I/O does not support WOW64…

> the cpu I’m testing with is core i7 5500

It is fast enough; in the post we discussed above I tested much older models. But you mentioned that you use a VM, while I tested on real hardware over a real 1 Gbps cable.

June 26, 2021 at 9:47 am #11574

> I tested on the real hardware over real 1 Gbps wire cable.

Are you sure you are copying from the share through your network connection? The picture you posted above shows the file being copied at 300MB/s, which is not possible over a 1 Gbps cable (note the difference between bytes and bits).

To get that 300MB/s you need a 10 Gbps network, which is not that common for simple networks.
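
The bits-versus-bytes arithmetic behind this objection can be checked with a small sketch. The function names are made up for illustration; the only fact used is 8 bits per byte, and protocol overhead is ignored, so real SMB payload rates sit somewhat below these ceilings.

```cpp
// Hypothetical helpers for converting between link rate (megabits per second)
// and file copy rate (megabytes per second), ignoring protocol overhead.
constexpr double megabits_to_megabytes(double megabits_per_second) {
    return megabits_per_second / 8.0;
}

constexpr double megabytes_to_megabits(double megabytes_per_second) {
    return megabytes_per_second * 8.0;
}

// A 1 Gbps (1000 Mbps) link tops out at 125 MB/s.
static_assert(megabits_to_megabytes(1000.0) == 125.0, "1 Gbps ceiling");

// A 300 MB/s copy needs 2400 Mbps, more than a 1 Gbps link can carry.
static_assert(megabytes_to_megabits(300.0) == 2400.0, "300 MB/s in Mbps");
```

By the same conversion, the 200Mb/s reported earlier in the thread corresponds to 25 MB/s.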

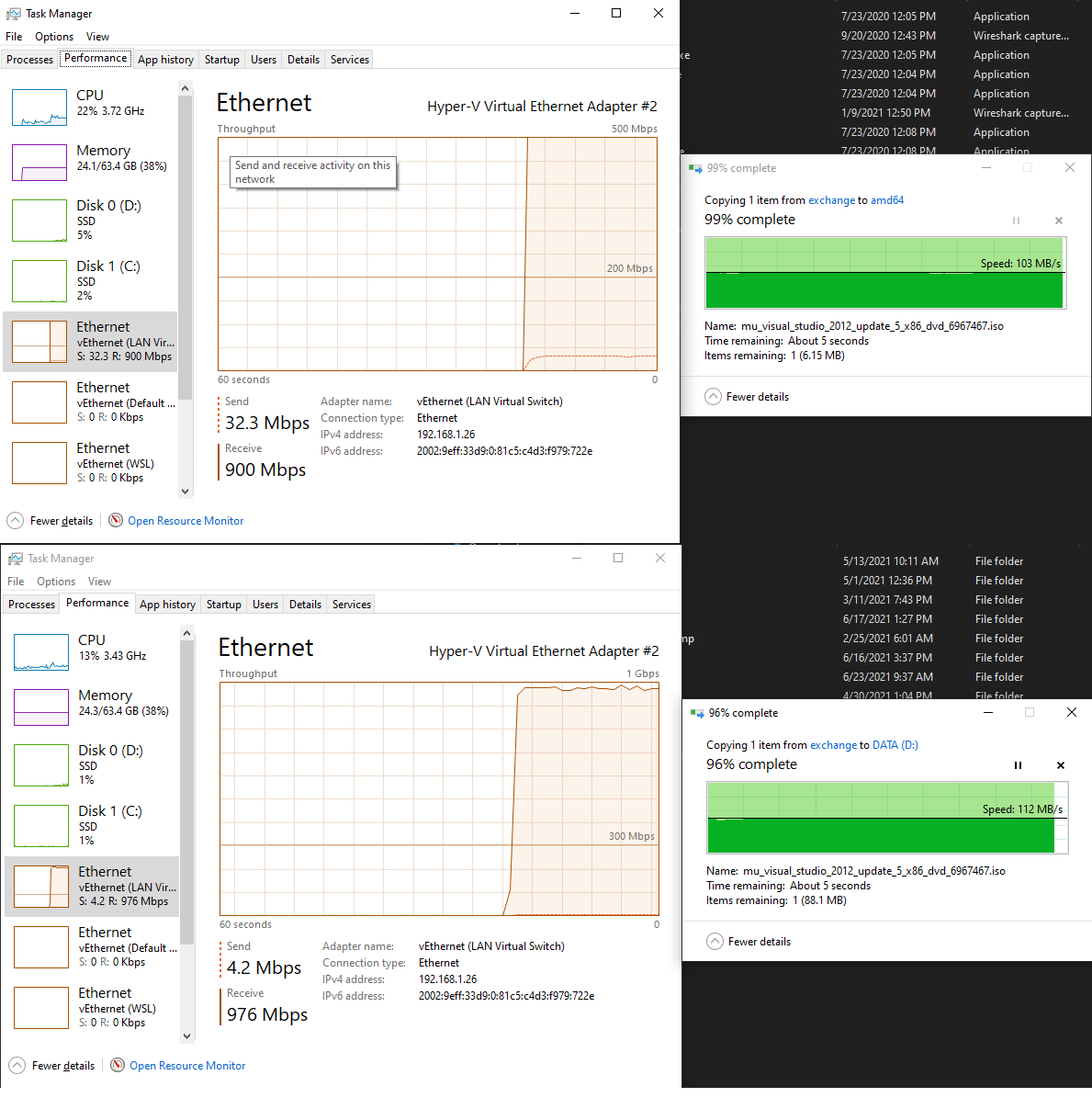

June 26, 2021 at 10:13 am #11577

Yes, sorry, it is my fault… Saturday evening 😉… In that case the traffic passed over a virtual network. Here is the test over the cable:

You can notice some bandwidth degradation (900 Mbps vs 976 Mbps without filtering) and extra CPU load.
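
To put the two measurements on the same scale, the relative throughput drop can be computed as below. This is just a worked calculation on the figures quoted in this thread; `throughput_drop_percent` is an illustrative name, not something from the samples.

```cpp
// Relative throughput loss in percent: (baseline - filtered) / baseline * 100.
// Assumes baseline > 0; both arguments must use the same unit.
constexpr double throughput_drop_percent(double baseline, double filtered) {
    return (baseline - filtered) / baseline * 100.0;
}

// 976 Mbps without filtering vs 900 Mbps with filtering: a bit under 8%.
static_assert(throughput_drop_percent(976.0, 900.0) > 7.7 &&
              throughput_drop_percent(976.0, 900.0) < 7.9,
              "cable test drop");

// 80 MB/s vs 40 MB/s, as reported for the VM test: 50%.
static_assert(throughput_drop_percent(80.0, 40.0) == 50.0, "VM test drop");
```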
